PanoDR: Spherical Panorama Diminished Reality for Indoor Scenes

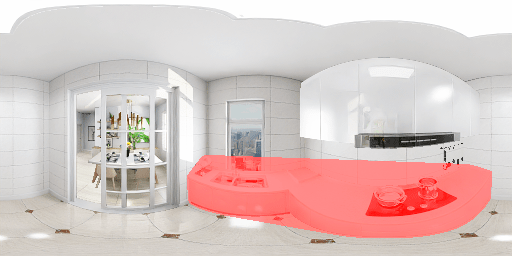

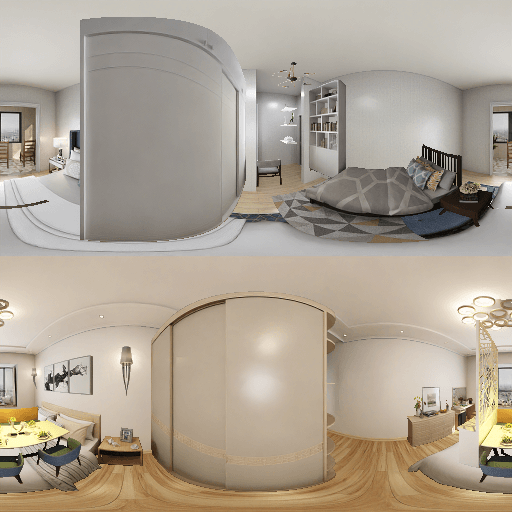

Diminishing the highlighted (red mask) object in indoor spherical panorama images. White lines annotate the scene’s layout in panorama and perspective views. Left to right: i) masked object to remove, ii) pure inpainting result of state-of-the-art methods (top row: RFR, bottom row: PICNet), iii) perspective view of inpainted region by these methods better shows that they do not necessarily respect the scene’s structural layout, iv) our panorama inpainting that takes a step towards preserving the structural reality, v) perspective view of inpainted region by our model, showing superior results both in texture generation and layout preservation. The results in this figure depict cases where RFR and PICNet provide reasonable structural coherency and aim at showcasing our model’s finer-grained accuracy.

Abstract

The rising availability of commercial 360° cameras that democratize indoor scanning has increased interest in novel applications such as interior space re-design. Diminished Reality (DR) fulfills the requirement of such applications to remove existing objects from the scene, essentially translating this into a counterfactual inpainting task. While recent advances in data-driven inpainting have shown significant progress in generating realistic samples, they are not constrained to produce results with reality-mapped structures. To preserve the ‘reality’ in indoor (re-)planning applications, preserving the scene’s structure is crucial. To ensure structure-aware counterfactual inpainting, we propose a model that first predicts the structure of an indoor scene and then uses it to guide the reconstruction of an empty (background-only) representation of the same scene. We train and compare against other state-of-the-art methods on a version of the Structured3D dataset [1] modified for DR, showing superior quantitative and qualitative results. More interestingly, our approach also exhibits a much faster convergence rate.

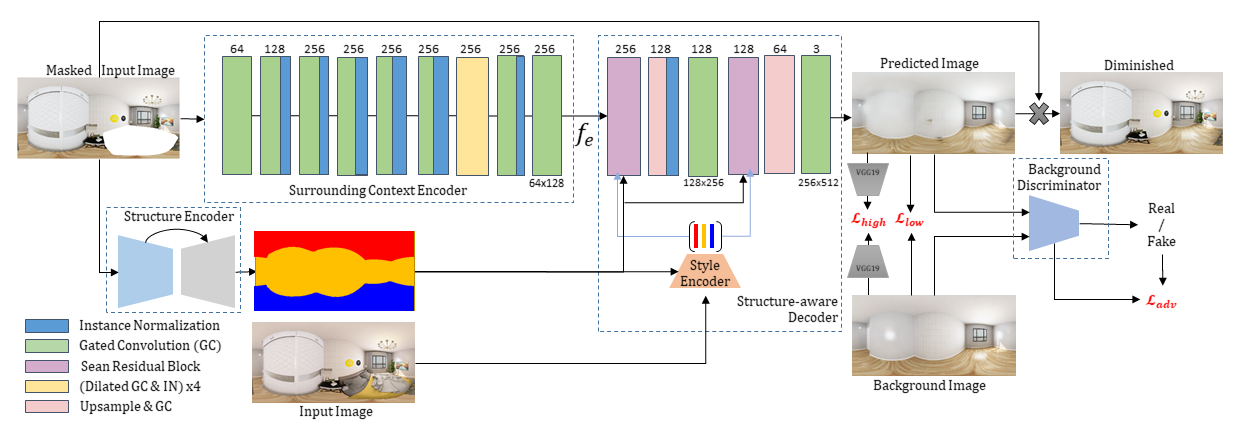

PanoDR Model Architecture

The input masked image is encoded twice: once densely by the structure UNet encoder, which outputs a layout segmentation map, and once by the surrounding context encoder, which captures the scene’s context while taking the mask into account via a series of gated convolutions. These are then combined by the structure-aware decoder with a set of per-layout-component style codes that are extracted from the complete input image. Two SEAN residual blocks ensure the structural alignment of the reconstructed background image, which is supervised by low- and high-level losses, as well as an adversarial loss driven by the background image discriminator. The final diminished result is created by compositing the predicted and input images using the diminishing mask.
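The final compositing step described above can be illustrated with a minimal NumPy sketch. This is not the repository's code: the function name `composite`, the array shapes, and the convention that the mask is 1 inside the diminished region are assumptions made for illustration.

```python
import numpy as np

def composite(input_image, predicted_background, mask):
    """Blend the predicted background into the input image inside the mask.

    input_image, predicted_background: float arrays of shape (H, W, 3).
    mask: array of shape (H, W); 1 where the object is diminished, 0 elsewhere
    (this convention is an assumption of the sketch).
    """
    m = mask.astype(input_image.dtype)[..., None]  # (H, W, 1) for broadcasting
    return m * predicted_background + (1.0 - m) * input_image

# Tiny synthetic example: a 4x8 "panorama" with a 2x2 masked region.
input_image = np.full((4, 8, 3), 0.2, dtype=np.float32)
predicted_background = np.full((4, 8, 3), 0.9, dtype=np.float32)
mask = np.zeros((4, 8), dtype=np.uint8)
mask[1:3, 2:4] = 1

result = composite(input_image, predicted_background, mask)
```

Pixels outside the mask are copied from the input unchanged, so only the diminished region depends on the network's prediction.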

Dataset

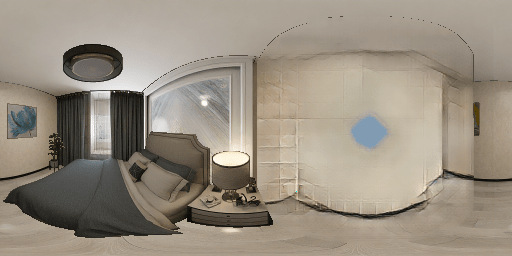

Structured3D provides three room configurations (empty, simple, and full), which in theory makes the dataset suitable for Diminished Reality applications. In practice this does not hold: the rooms are rendered with ray tracing, which affects global illumination and texture, so replacing a region of the full configuration with the corresponding region of the empty one does not produce photo-consistent results.

Left: full room configuration with the object to be diminished annotated. Right: full room configuration with that region replaced from the empty room configuration.

The diminished scene shows a large photometric inconsistency in the diminished region, which makes such samples unsuitable for training deep learning models. To overcome this barrier, we augment the empty rooms with objects from the corresponding full room configurations, so that replacing a region is photo-consistent.
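To make the idea of a photo-consistent training sample concrete, here is a minimal sketch that pastes an object into an empty-room image and returns an (input, mask, target) triplet. It is a 2D illustration only and does not reproduce the actual dataset generation pipeline: `make_dr_sample`, the synthetic arrays, and the simple masked paste are all assumptions.

```python
import numpy as np

def make_dr_sample(empty_room, object_rgb, object_mask):
    """Paste an object into an empty-room image (hypothetical helper).

    empty_room, object_rgb: float arrays of shape (H, W, 3).
    object_mask: boolean array of shape (H, W), True on object pixels.
    Returns (augmented_input, mask, target); the target is the untouched
    empty room, so it matches the input exactly outside the mask.
    """
    m = object_mask.astype(bool)[..., None]  # (H, W, 1) for broadcasting
    augmented = np.where(m, object_rgb, empty_room)
    return augmented, object_mask.astype(np.uint8), empty_room

# Synthetic stand-ins for an empty-room panorama and an object to paste.
rng = np.random.default_rng(0)
empty_room = rng.random((4, 8, 3), dtype=np.float32)
object_rgb = np.zeros((4, 8, 3), dtype=np.float32)
object_mask = np.zeros((4, 8), dtype=bool)
object_mask[2:4, 3:6] = True

augmented, mask, target = make_dr_sample(empty_room, object_rgb, object_mask)

# Photo-consistency check: input and target agree everywhere outside the mask.
outside = mask == 0
max_diff_outside = float(np.abs(augmented - target)[outside].max())
```

Because the target is the same image the object was pasted into, the difference outside the mask is zero by construction, which is the property that the full-versus-empty replacement lacks.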

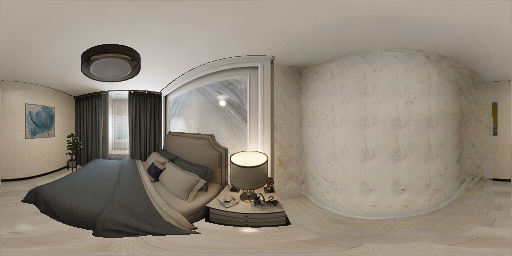

Left: empty room configuration with augmented objects. Right: augmented room with the table diminished.

The only drawback of creating samples this way is the absence of shadows, which makes the scene less realistic. For Diminished Reality applications, however, the benefit of photo-consistent samples outweighs this cost.

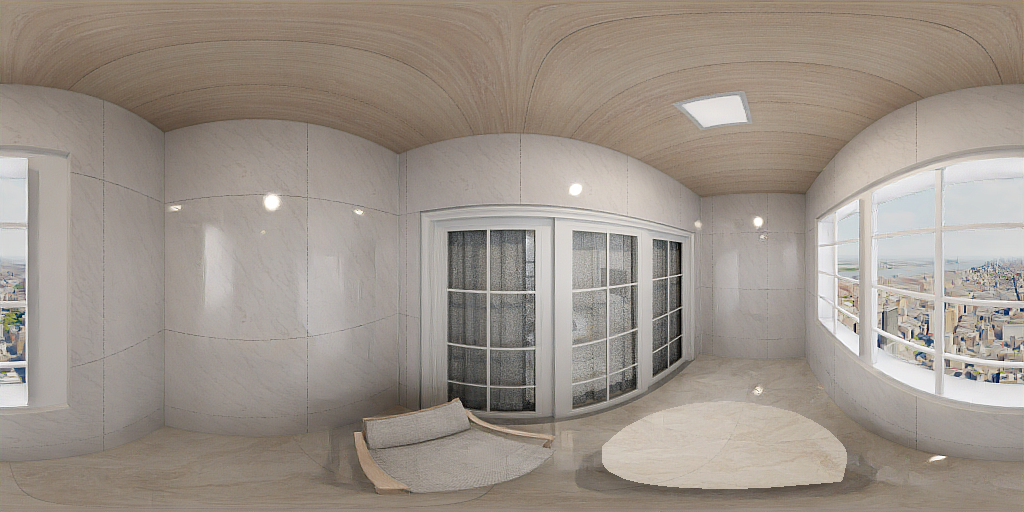

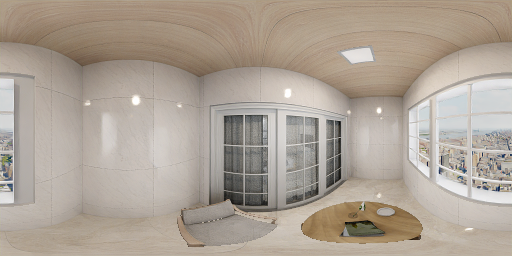

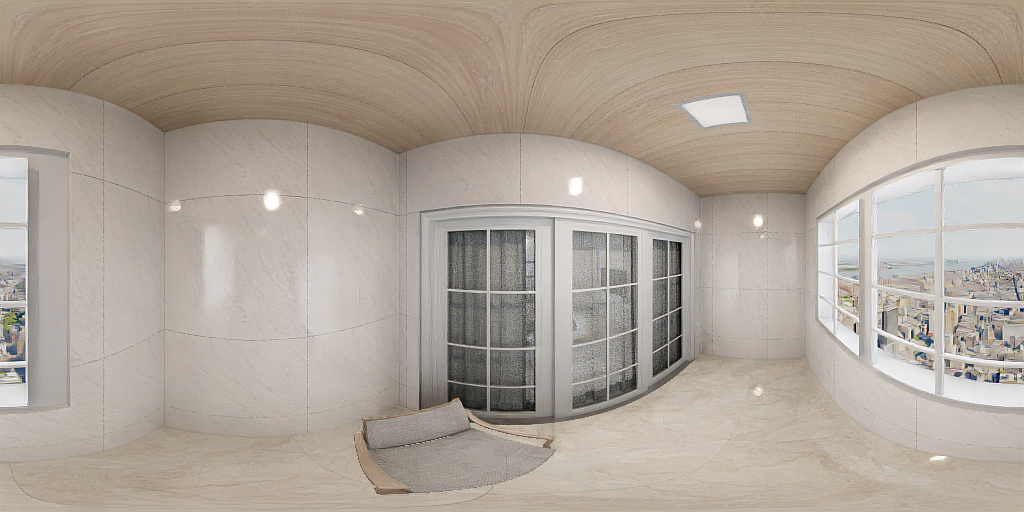

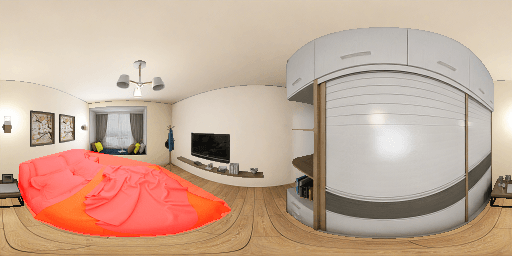

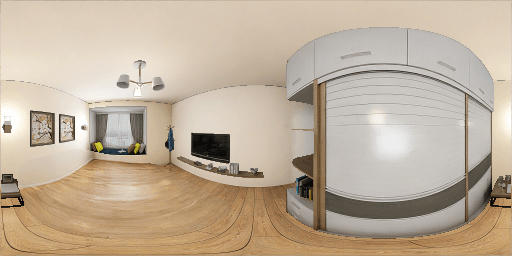

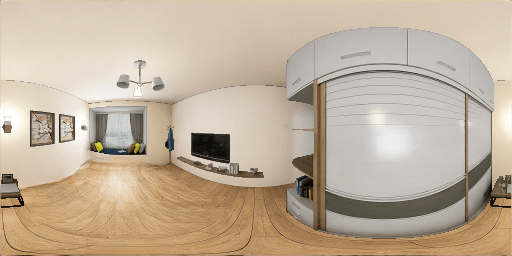

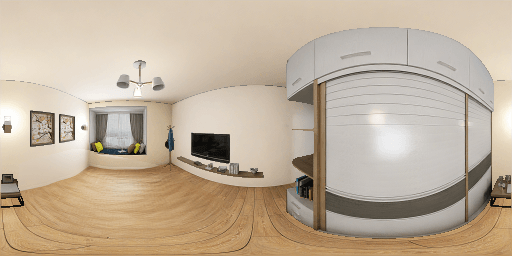

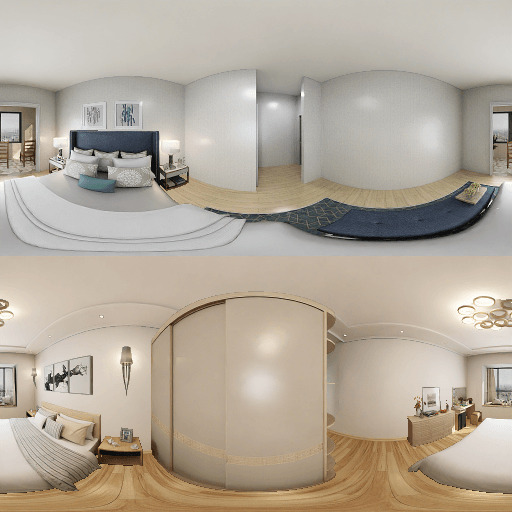

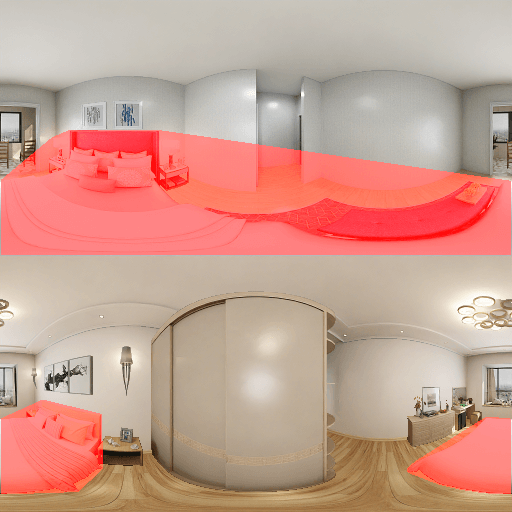

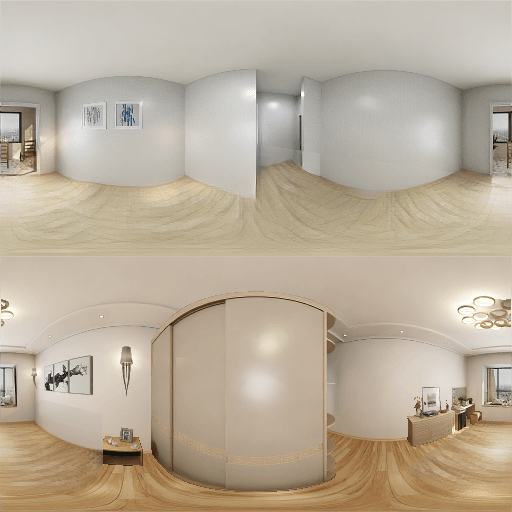

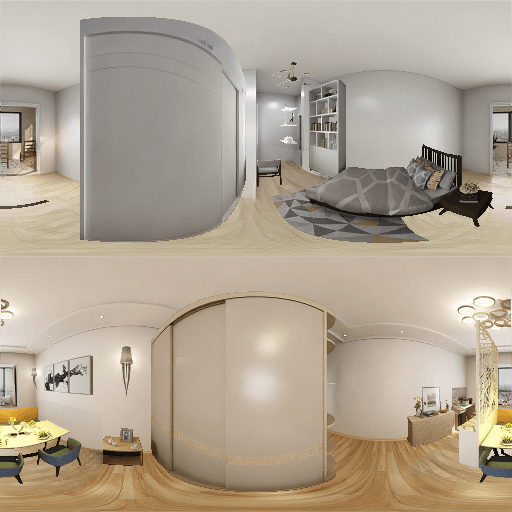

Qualitative results for diminishing objects from scenes in our test set.

From left to right: i) input panorama with the diminished area masked in transparent red, ii) RFR, iii) PICNet, and iv) ours.

Qualitative results for diminishing objects in real-world scenes.

AR Application

A demonstration of our method, which facilitates AR/DR applications. From left to right: i) original panorama, ii) augmented reality without diminished reality, iii) highlighted object for removal, iv) result of our inpainting method, v) augmented reality with diminished reality, where the result is much more natural.

Web Application with Streamlit

Citation

If you use this code for your research, please cite the following:

@inproceedings{gkitsas2021panodr,
  title={PanoDR: Spherical Panorama Diminished Reality for Indoor Scenes},
  author={Gkitsas, Vasileios and Sterzentsenko, Vladimiros and Zioulis, Nikolaos and Albanis, Georgios and Zarpalas, Dimitrios},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={3716--3726},
  year={2021}
}

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 innovation programme ATLANTIS under grant agreement No 951900.

Our code borrows from SEAN and deepfillv2. We thank Usability Partners for providing us images for qualitative results.

References

[1] Zheng, J., Zhang, J., Li, J., Tang, R., Gao, S. and Zhou, Z., 2020. Structured3D: A large photo-realistic dataset for structured 3D modeling. In 16th European Conference on Computer Vision, ECCV 2020 (pp. 519-535). Springer Science and Business Media Deutschland GmbH.