DronePose

Photorealistic UAV-Assistant Dataset Synthesis for 3D Pose Estimation via a Smooth Silhouette Loss

Abstract

In this work we consider UAVs as cooperative agents supporting human users in their operations. In this context, the 3D localisation of the UAV assistant is an important task that can facilitate the exchange of spatial information between the user and the UAV. To address this in a data-driven manner, we design a data synthesis pipeline to create a realistic multimodal dataset that includes both the exocentric user view, and the egocentric UAV view. We then exploit the joint availability of photorealistic and synthesized inputs to train a single-shot monocular pose estimation model. During training we leverage differentiable rendering to supplement a state-of-the-art direct regression objective with a novel smooth silhouette loss. Our results demonstrate its qualitative and quantitative performance gains over traditional silhouette objectives.

Overview

In-The-Wild (YouTube) Results

Loss Analysis

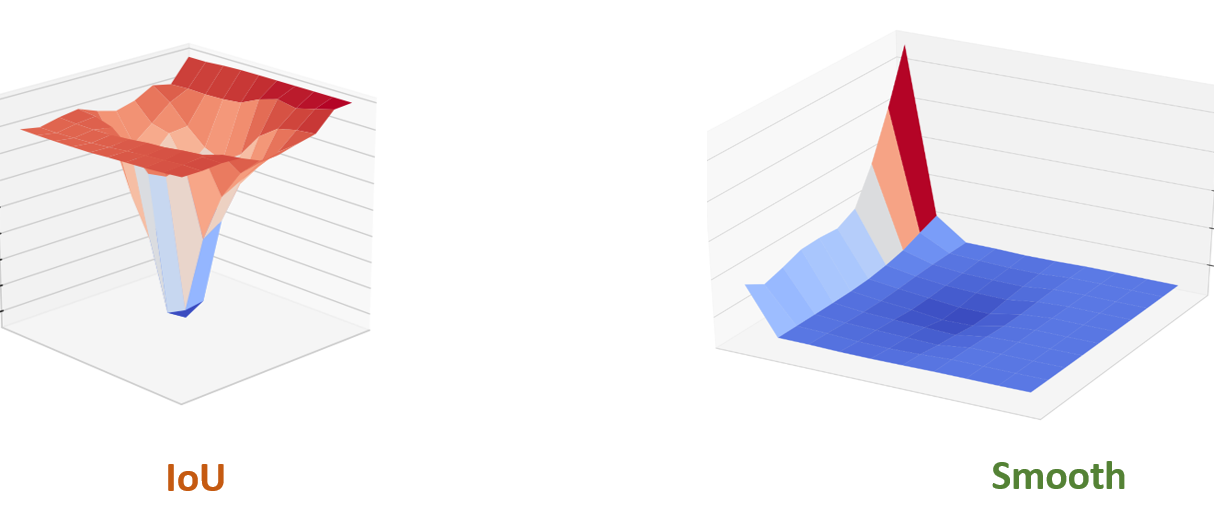

Our quantitative results demonstrate that adding complementary silhouette-based supervision through a differentiable renderer enhances overall performance. We also compare the traditional IoU silhouette loss against our proposed smoother objective. From the following multi-faceted analysis we conclude that the smoother loss variant exhibits more robust inference performance.
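For reference, the traditional IoU silhouette objective mentioned above is commonly written as one minus the soft intersection-over-union of the rendered and target masks. The snippet below is a minimal sketch of that baseline only, not the proposed smooth loss, which is defined in the paper. The function name `iou_silhouette_loss`, the `eps` stabiliser, and the toy 3x3 masks are assumptions made for illustration.

```python
def iou_silhouette_loss(pred, target, eps=1e-6):
    """Soft IoU loss between two silhouettes given as 2D lists of values in [0, 1].

    Illustrative baseline only; names and eps are assumptions, not the authors' code.
    """
    intersection = 0.0
    union = 0.0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += p * t          # soft overlap of the two masks
            union += p + t - p * t         # soft union (inclusion-exclusion)
    return 1.0 - intersection / (union + eps)


# Toy masks: the prediction is the target shifted one pixel to the left.
target_mask = [[0, 1, 1],
               [0, 1, 1],
               [0, 0, 0]]
shifted_mask = [[1, 1, 0],
                [1, 1, 0],
                [0, 0, 0]]

loss_same = iou_silhouette_loss(target_mask, target_mask)
loss_shifted = iou_silhouette_loss(shifted_mask, target_mask)
```

With binary masks the loss depends only on the overlap area, so small pose changes that barely alter the overlap produce little signal, which is one reason a smoother objective can be attractive.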

Loss Landscape

The following loss landscape analysis shows a 3D visualization of the loss surface of an IoU-supervised model in comparison to that of the smooth silhouette loss. The IoU-trained model has noticeable convexity in contrast to our proposed smooth loss.

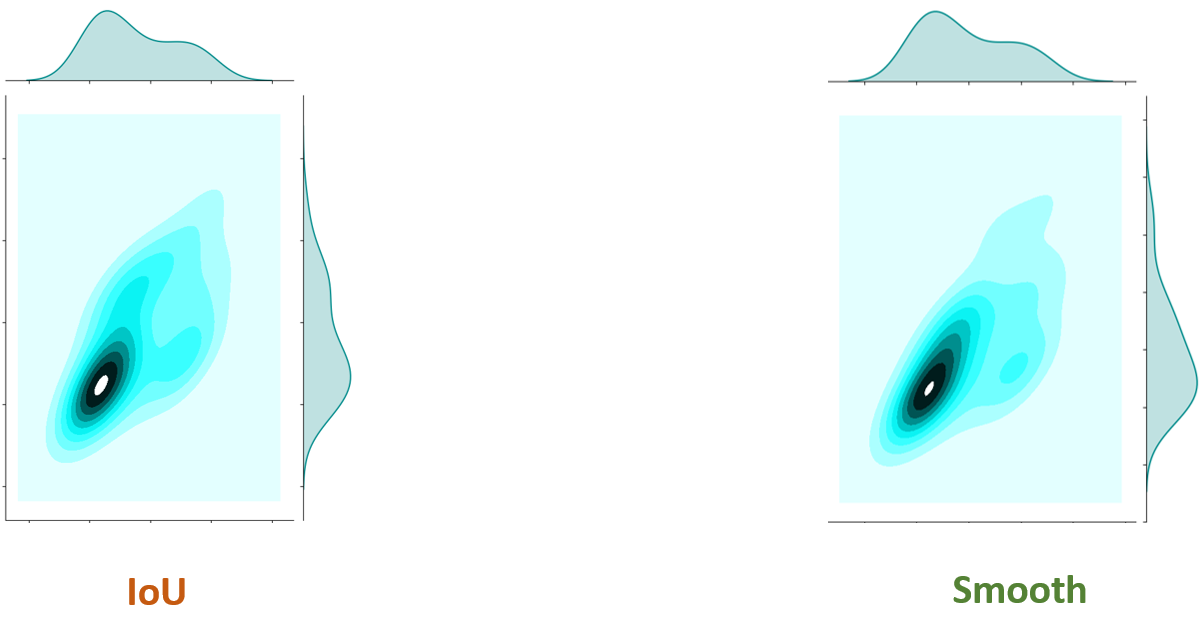

Loss Distribution

The following kernel density plots show the loss evaluations across a dense sampling of poses around the same rendered silhouette. They illustrate how the proposed loss offers a smoother objective with a better-defined minimum region.

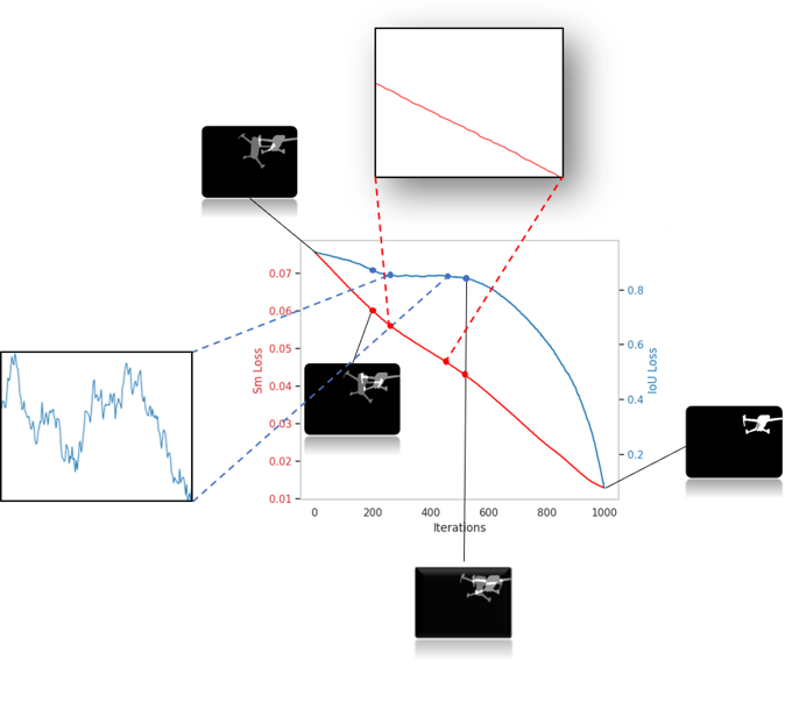
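A kernel density plot of this kind can be produced from a list of loss values, one per sampled pose. The following is a minimal sketch of a one-dimensional Gaussian kernel density estimate. The bandwidth uses the normal-reference rule of thumb (1.06 · σ · n^(-1/5)), which is an assumption and not necessarily the setting behind the plots above, and the sample values are made up for illustration.

```python
import math
import statistics


def gaussian_kde(samples, bandwidth=None):
    """Return a function estimating the density of `samples` (needs >= 2 values).

    Illustrative sketch; the bandwidth rule is an assumption.
    """
    n = len(samples)
    if bandwidth is None:
        # Normal-reference rule of thumb for a Gaussian kernel.
        bandwidth = 1.06 * statistics.stdev(samples) * n ** (-1.0 / 5.0)
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        # Sum of Gaussian bumps centred on each sample.
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


# Hypothetical loss values evaluated at poses sampled around one silhouette.
loss_values = [0.10, 0.20, 0.25, 0.30, 0.90]
density = gaussian_kde(loss_values)

# Evaluate the estimate on a grid, e.g. for plotting.
grid = [i / 100.0 for i in range(-300, 401)]
curve = [density(x) for x in grid]
```

Comparing such curves for two losses over the same pose samples shows how concentrated each loss is around its minimum.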

Pose Interpolation

In this experiment, we interpolate from a random pose towards the ground-truth pose (x-axis) and plot the sampled loss function value (y-axis). The sensitivity of IoU is evident compared to the smoother behaviour of our proposed silhouette loss function.
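One way to generate such an interpolation path is to blend the translation linearly and the rotation with spherical linear interpolation (slerp) between unit quaternions. This is a sketch under assumptions: the text does not state how the interpolation was performed, and the pose layout (a translation list plus a `[w, x, y, z]` quaternion) and the function names are hypothetical.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions [w, x, y, z]."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip to take the shorter arc.
        q1 = [-c for c in q1]
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: fall back to linear interpolation.
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
    else:
        theta = math.acos(dot)
        w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        w1 = math.sin(t * theta) / math.sin(theta)
        q = [w0 * a + w1 * b for a, b in zip(q0, q1)]
    norm = math.sqrt(sum(c * c for c in q))
    return [c / norm for c in q]


def interpolate_pose(start, end, steps):
    """Return `steps` poses (steps >= 2) from `start` to `end`, inclusive."""
    (t0, q0), (t1, q1) = start, end
    poses = []
    for i in range(steps):
        alpha = i / (steps - 1)
        translation = [a + alpha * (b - a) for a, b in zip(t0, t1)]
        poses.append((translation, slerp(q0, q1, alpha)))
    return poses


# Hypothetical poses: identity rotation to a 90 degree turn about the z-axis.
random_pose = ([0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0])
gt_pose = ([2.0, 0.0, 4.0],
           [math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4)])
path = interpolate_pose(random_pose, gt_pose, steps=5)
```

Rendering the silhouette at each pose in `path` and evaluating a loss against the ground-truth silhouette gives one curve of the kind described above.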

Unseen Data Qualitative Performance

Finally, the following in-the-wild qualitative results showcase that a model trained with the smoother objective (red overlay) offers more consistent predictions across time than one trained with a typical IoU silhouette loss (blue overlay). This qualitative comparison on unseen real data highlights the differences between these models, which nonetheless provide similar quantitative performance on our test set.

Data

The data used to train our methods is a subset of the UAVA dataset. In particular, we used only the exocentric views, which can be found in the following Zenodo repository.

The data can be downloaded following a two-step process, as described in the corresponding UAVA dataset page.

Publication

Authors

Georgios Albanis *, Nikolaos Zioulis *, Anastasios Dimou, Dimitrios Zarpalas, and Petros Daras

Citation

@inproceedings{albanis2020dronepose,

author = "Albanis, Georgios and Zioulis, Nikolaos and Dimou, Anastasios and Zarpalas, Dimitris and Daras, Petros",

title = "DronePose: Photorealistic UAV-Assistant Dataset Synthesis for 3D Pose Estimation via a Smooth Silhouette Loss",

booktitle = "European Conference on Computer Vision Workshops (ECCVW)",

month = "August",

year = "2020"

}

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 innovation programme FASTER under grant agreement No 833507.
