Data Recording

This section describes how to use VolCap to record multi-view RGB-D data in various deployment settings.

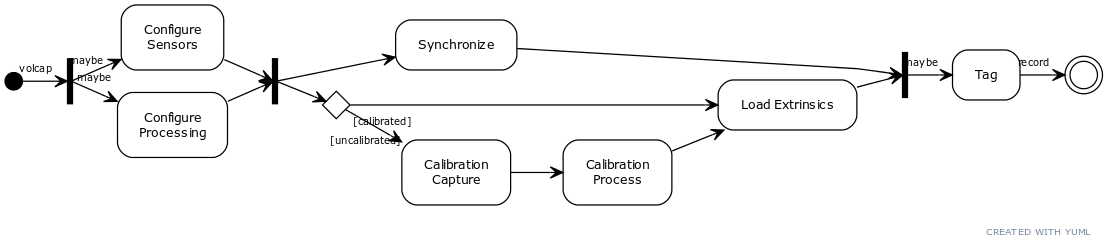

The overall recording process depicted above:

- Starts by firing up volcap.exe (with the appropriate arguments)

- Optionally configuring sensor or processing parameters

- Synchronizing the Eyes

- Calibrating the Eyes:

  - If a calibration has already been performed and the sensors have not been moved, then loading the latest existing calibration information suffices.

  - Otherwise, calibration data need to be captured first and then processed, with the new latest results loaded into the system.

- Then, users can optionally tag the recording using the corresponding input text widget, and then press the recording button to start recording.

If the sensor processing units are Intel NUCs and the installation has also configured the LED utilities, then for each distinct step, the NUC’s LEDs will be colored accordingly:

| IDLE | CONNECTED | CALIBRATING | RECORDING |
|---|---|---|---|
|  |  |  |  |

Four (4) Kinect 4 Azure Devices

Initialization

When VolCap starts, all the available devices should be automatically displayed in the Devices widget. The user should check the devices to be connected, optionally select a device as the master for hardware synchronization, and hit the connect button. Each device stream will be displayed in a dedicated window with a distinct color.

Calibration

Before recording a sequence, the system must be calibrated. The user should assemble the calibration structure in the middle of the capturing space, optionally change the calibration settings if needed (we usually use 25 inner and 20 outer iterations), capture RGB-D frames, and hit the Process option in the Calibration menu.

Preparing and Recording

After calibrating the system, the user should synchronize the devices with the main workstation (see the Synchronization section). Additionally, it is good practice to reset the Exposure and Color Gain settings for all the devices, in order to get consistent results between the devices.

Three (3) Kinect 4 Azure Devices

It is also possible to connect and calibrate K devices, with K >= 3. Just follow all the standard steps (described above and in the Volumetric Capture section).

Admittedly, it is harder to calibrate with K = 3 devices (in the first setting of issue #29). However, with a little effort it is still possible.

Six (6) Kinect 4 Azure Devices

You can connect more devices if needed. However, you should consider bandwidth issues that may appear (and choose a lower quality profile when connecting the devices).

Eight (8) mixed devices with six (6) Kinect 4 Azure and two (2) Intel RealSense D415 Devices

You will observe differences between the different types of sensors, so care should be taken when recording in mixed mode. You should modify each device's settings (and maybe the environment’s lighting) in order to achieve a similar color response across all views.

Recording Extraction

After the recording session is completed (as described above), the user can save data from distinct frames of each recorded file (described in the Volumetric Snapshot section). The user can save the recorded depth and color images (optionally undistorted, if the corresponding option is selected before exporting starts) and pointcloud data, with and without per-vertex color (the pointclouds will also be undistorted if that option is selected). Finally, if the calibration option is selected, the exported pointclouds will be aligned to the global coordinate system. Once the exporting is completed, the output directory should look like this:

depending on the exporting options selected.

These folders contain the corresponding data, with the group_id, device_name and frame_id contained in the filename, which is formatted as:

{group_id}_{device_name}_{data_type}_{frame_id}.ext.
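For post-processing scripts, it can be handy to split an exported filename back into its fields. The following is a minimal Python sketch, not part of VolCap itself. The helper name parse_exported_name and the example filenames are hypothetical, and the sketch assumes that group_id and frame_id are integers and that data_type contains no underscore (so any extra underscores are attributed to device_name).

```python
from pathlib import Path
from typing import NamedTuple


class ExportedName(NamedTuple):
    group_id: int
    device_name: str
    data_type: str
    frame_id: int
    ext: str


def parse_exported_name(filename: str) -> ExportedName:
    """Split '{group_id}_{device_name}_{data_type}_{frame_id}.ext' into fields.

    Assumptions (not confirmed by the documentation): group_id and frame_id
    are integers, and data_type contains no underscore.
    """
    path = Path(filename)
    parts = path.stem.split("_")
    if len(parts) < 4:
        raise ValueError(f"unexpected filename layout: {filename!r}")
    group_id = int(parts[0])               # first field
    frame_id = int(parts[-1])              # last field
    data_type = parts[-2]                  # second to last field
    device_name = "_".join(parts[1:-2])    # everything in between
    return ExportedName(group_id, device_name, data_type, frame_id,
                        path.suffix.lstrip("."))


if __name__ == "__main__":
    # Hypothetical filename, used only to illustrate the pattern.
    print(parse_exported_name("0_deviceA_depth_12.pgm"))
```

Parsing from both ends keeps the sketch tolerant of device names that themselves contain underscores, at the cost of the data_type assumption stated above.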

Color, depth and pointcloud data from the first group (0) of a dumped recording.

Depth images can be previewed in any program that supports the .pgm file format (e.g. IrfanView), and pointclouds can be visualized in any 3D viewer that supports the .ply file format (e.g. MeshLab).
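If you would rather inspect depth values programmatically than in a viewer, a binary PGM file is simple enough to read with the Python standard library alone. The sketch below is illustrative and not part of VolCap. The function name read_pgm is hypothetical, and it assumes the exported files are binary (P5) PGMs that follow the Netpbm convention of storing 16-bit samples most significant byte first. It makes no claim about the units of the stored depth values.

```python
import struct


def read_pgm(data: bytes):
    """Parse a binary (P5) PGM image; return (width, height, maxval, rows)."""
    pos = 0
    tokens = []
    # The header holds four whitespace-separated tokens: magic, width,
    # height, maxval. Lines starting with '#' are comments.
    while len(tokens) < 4:
        while data[pos:pos + 1].isspace():
            pos += 1
        if data[pos:pos + 1] == b"#":
            while data[pos:pos + 1] not in (b"\n", b""):
                pos += 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        if start == pos:
            raise ValueError("truncated PGM header")
        tokens.append(data[start:pos])
    pos += 1  # exactly one whitespace byte separates maxval from the raster

    if tokens[0] != b"P5":
        raise ValueError("not a binary PGM (P5) file")
    width, height, maxval = (int(t) for t in tokens[1:])
    sample_size = 1 if maxval < 256 else 2
    count = width * height
    raster = data[pos:pos + count * sample_size]
    if len(raster) != count * sample_size:
        raise ValueError("truncated PGM raster")

    if sample_size == 1:
        samples = list(raster)
    else:
        # Assumption: big-endian 16-bit samples, as in the Netpbm format.
        samples = list(struct.unpack(f">{count}H", raster))
    rows = [samples[r * width:(r + 1) * width] for r in range(height)]
    return width, height, maxval, rows


if __name__ == "__main__":
    # Synthetic 2x2 image, so the example runs without any exported data.
    demo = b"P5\n2 2\n65535\n" + struct.pack(">4H", 0, 1, 500, 65535)
    print(read_pgm(demo))
```

To read a real export, pass the file contents, for example `read_pgm(open(path, "rb").read())`, where path points to one of the exported .pgm files.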

Here we show the calibrated merged views of colored pointclouds, as exported by volsnap.exe.